We were supposed to take the proverbial breather after OODA Loop. But guess what? As expected, the OODA loop keeps getting shorter. Hence, against the run of play, here is another article after a gap of just two days.

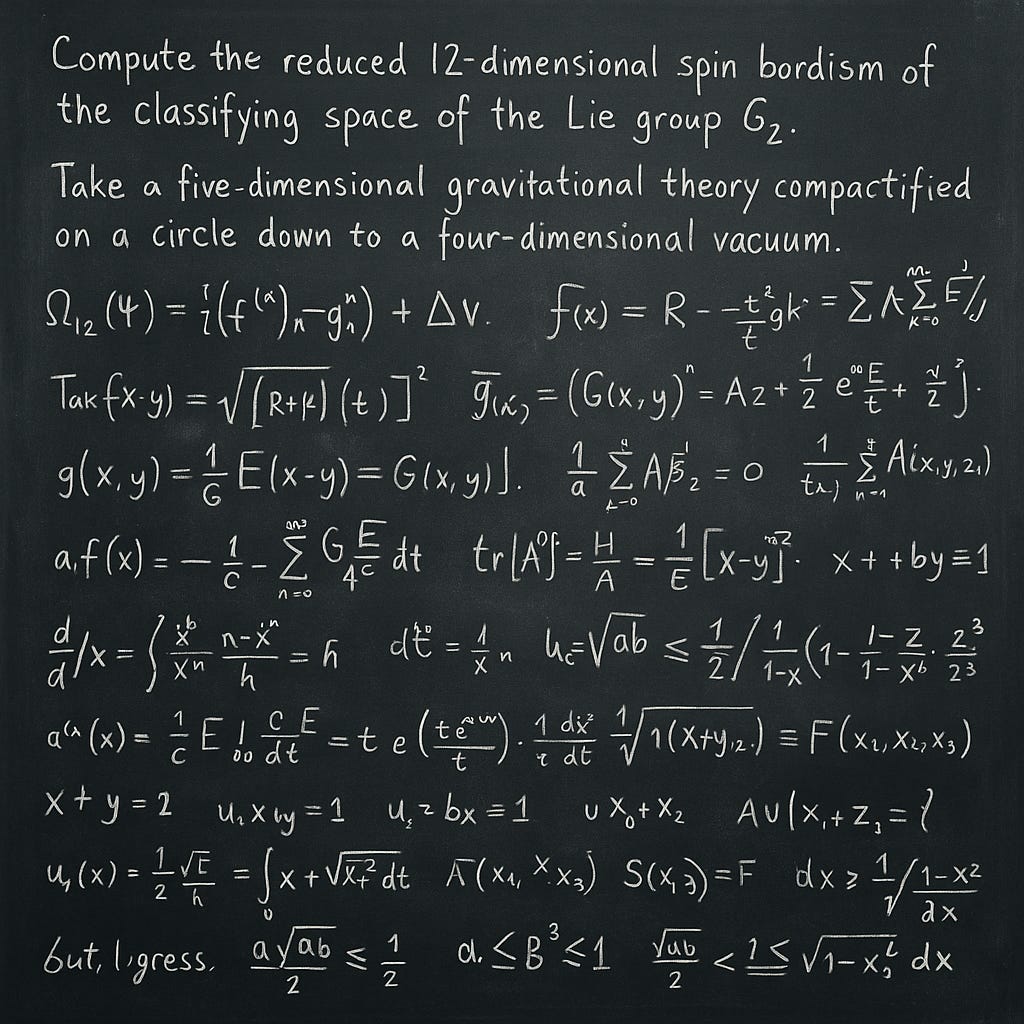

Compute the reduced 12-dimensional spin bordism of the classifying space of the Lie group G₂.

Take a five-dimensional gravitational theory compactified on a circle down to a four-dimensional vacuum.

Did you understand that?

Neither did I. They are questions, requiring advanced mathematics, theoretical physics and topology to know, grasp and apply. Part of the task is understanding the question itself.

Elon Musk's Grok 4 solved these with ease.

Let us wrap our heads around that.

Grok, the brainchild of Elon Musk, has been at the edges of my cognitive space. I always thought it was a 'frog', one especially adept at catching all the noise on X (Twitter). Grok began its journey 28 months ago, long after OpenAI and Anthropic had reached Base Camp on the climb to the AI summit. However, Elon being Elon, he threw in the kitchen sink: a billion dollars' worth of high-end Graphics Processing Units (GPUs), mass-scale engineering, and a garage-startup culture (a few hours of sleep at the office after a 4 AM shift, then beginning again). The result was Grok 4, which out-competed the big names.

Just a couple of days back, Grok 4 did something staggering.

It scored 100 percent in the American Invitational Mathematics Examination — a benchmark designed for exceptional high schoolers aiming for Olympiads.

And then, as if that weren't enough, it went on to score 44 percent in Humanity's Last Exam, a 2,500-question marathon spanning physics, mathematics, chemistry, engineering and more.

And while doing that, it answered the questions above, about which I haven't the foggiest idea.

What does it mean?

It means that while life is going on, it is 'as usual' only on the surface.

Tectonic shifts happen, and we realize they have happened only after the fact.

We did not realize STD booths would go away, till they suddenly did. We kept our landline telephone as a prized possession, a mark of our identity, till it suddenly disappeared.

In the same vein, we passed the Turing Test a while back, and we did not realize it. For background, the Turing Test, proposed by Alan Turing, the father of computer science, revolves around one question: can a machine imitate a person so convincingly that a human judge cannot tell the difference? Modern LLMs have left that test in the dust.

And now, Grok 4 has scored 44 percent in Humanity's Last Exam, where PhDs score around 8-10 percent. Max.

I will not even stop to tell you how to be smart and catch this wave.

The wave is getting bigger, more urgent, and faster.

Behind the wave is this insane demand for energy. And for processing power. City-sized areas will soon serve as captive power sources for LLMs. We may think Nick Bostrom's ideas about planetary-scale AI in Superintelligence (2014) are too far-fetched today, and that it will be some time before AI requires the energy of an entire planet just to feed itself.

I only pause. And observe how Nvidia, the GPU maker, has a market capitalization of 4 trillion USD today, larger than the GDP of 189 of the world's 194 countries, trailing only the US, China, Germany, India and Japan.

So, planetary-scale energy consumption may be just another short hop away, something that might suddenly appear to be a fact, just as mobile phones are today.

But. I digress.

We still have a few aces up our sleeve in this arms race with AI. Even today, our smallest cell conducts a billion operations a millisecond, something AI cannot do. AI has also yet to carry out genuine planning, and it has not yet invented anything.

Musk says he expects an invention before the year is out. He says he will be 'shocked' if it doesn't happen by next year.

Seven hundred words is too short a space to explain the impact.

But enough to feel it in our bones.
